Overview

You may have experience deploying web applications on a LAMP server, which runs both the web server and the database server. Hosting your entire web application stack on a single server works well for development and light-traffic sites. Eventually, though, user traffic will increase and put a lot of stress on your once underutilized server. At that point, you will have to start researching how to scale to meet the demands of your web traffic.

Throughout this series of tutorials, we will build out the infrastructure that allows our application to handle high volumes of traffic. We’ll use web caching servers to reduce the processing work of our application, and load balancers to ensure traffic is balanced between our caching servers. The focus will be on Ubuntu 14.04; however, the concepts apply to any Linux distribution.

Infrastructure Layers

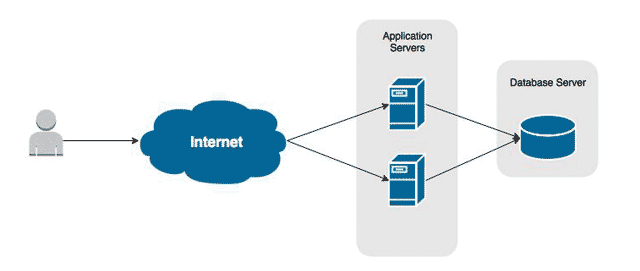

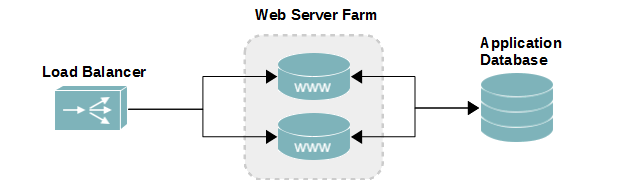

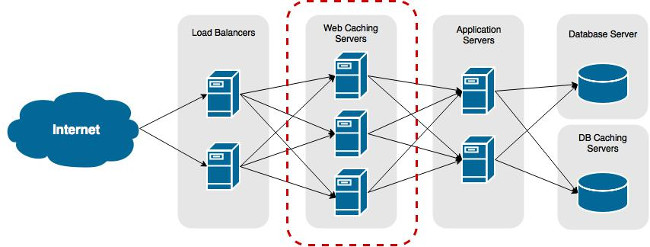

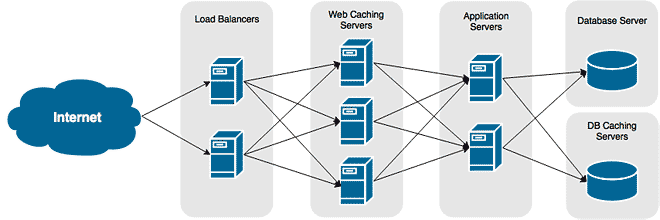

As the figure shows, several different layers of infrastructure work together to present your website. Most popular websites, like Facebook and Netflix, use similar infrastructure components, albeit at a much larger scale and with even more layers.

The example pictured is typical for most medium-sized websites. Requests start at the load balancer, which spreads the load between your HTTP web caching servers. If the caching servers do not have a cached copy of the response, the request is sent to the application servers to be processed. The application servers then query the DB caching servers for cached results of the DB queries. If a cached result exists, it is sent to the application server; otherwise, the application has to query the database server.
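The last two steps of that flow, checking the DB cache first and falling back to the database only on a miss, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `QueryCache` and `fake_database` are hypothetical stand-ins invented for this example, and a plain in-memory dict plays the role of the DB caching server.

```python
from typing import Callable, Dict, List


class QueryCache:
    """Hypothetical stand-in for a DB caching server (a dict held in memory)."""

    def __init__(self) -> None:
        self._store: Dict[str, List[dict]] = {}
        self.hits = 0
        self.misses = 0

    def get_or_load(self, query: str, run_query: Callable[[str], List[dict]]) -> List[dict]:
        # A cached copy exists: return it without touching the database.
        if query in self._store:
            self.hits += 1
            return self._store[query]
        # No cached copy: query the database and remember the result.
        self.misses += 1
        rows = run_query(query)
        self._store[query] = rows
        return rows


def fake_database(query: str) -> List[dict]:
    """Hypothetical database call that returns canned rows."""
    return [{"query": query, "value": 42}]


cache = QueryCache()
first = cache.get_or_load("SELECT * FROM posts", fake_database)   # miss, hits the database
second = cache.get_or_load("SELECT * FROM posts", fake_database)  # hit, served from the cache
```

Only the first call reaches `fake_database`; the second is answered from the cache, which is the behavior the DB caching layer is meant to provide.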

Building the Infrastructure

Through this series of tutorials, we will learn about and deploy the various layers of our infrastructure.

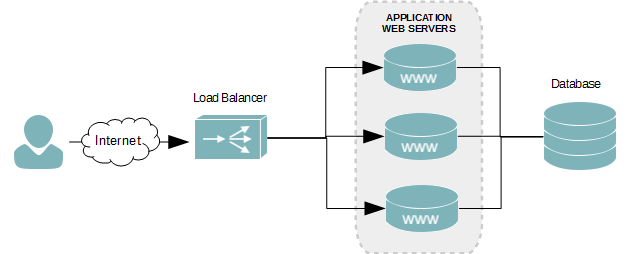

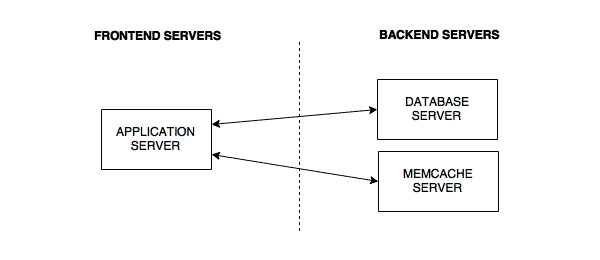

Application Servers

These are the servers that host our application. Each server will be a mirror of the others. This is crucial because you want the user experience to be the same no matter which server a user lands on.

Read Part II, Application Server and Database

Web Caching Server

Almost every web application displays some content that rarely changes. Even though that content doesn’t change, your application still processes every request for it before displaying it. That is inefficient, and it will soon become a major bottleneck in your infrastructure.

Adding a caching layer to your site’s infrastructure allows frequently viewed content to be served without the application processing it again. The content is stored in a caching server’s RAM, where it can be retrieved many times faster than it could be regenerated.
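To make the principle concrete, here is a minimal Python sketch that keeps rendered pages in memory using the standard library's `functools.lru_cache`. It is an in-process illustration only, not how a dedicated caching server is deployed; `render_page` and `render_count` are hypothetical names made up for this example.

```python
from functools import lru_cache

render_count = 0  # counts how often the "application" actually does the work


@lru_cache(maxsize=128)
def render_page(path: str) -> str:
    """Hypothetical page render; imagine templates and DB queries here."""
    global render_count
    render_count += 1
    return f"<html><body>Content for {path}</body></html>"


# Simulate 1000 requests for the same rarely changing page.
for _ in range(1000):
    html = render_page("/about")
```

The page is rendered once and the remaining 999 requests are answered from memory. A real caching server applies the same idea in front of the application, as a separate layer.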

Read Part III, Web Caching Servers

Load Balancer

As your application grows in popularity, you will have to host it on more than one server. A load balancer allows requests to be, as its name suggests, balanced across all of your application servers. This ensures that no one server takes on all of the load.
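One simple way to balance requests is round robin, where each new request goes to the next server in the list. The sketch below assumes that strategy purely for illustration (real load balancers offer several others), and the class name and server names are hypothetical.

```python
from collections import Counter
from itertools import cycle
from typing import Iterable


class RoundRobinBalancer:
    """Hands out backend servers in a fixed rotating order."""

    def __init__(self, servers: Iterable[str]) -> None:
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        self._pool = cycle(servers)  # endless iterator over the server list

    def next_server(self) -> str:
        return next(self._pool)


# Hypothetical server names; nine requests spread over three servers.
balancer = RoundRobinBalancer(["app1", "app2", "app3"])
assignments = Counter(balancer.next_server() for _ in range(9))
```

Each of the three servers receives three of the nine requests, so no single server takes on all of the load.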

Content Delivery Networks

Large static files like images consume a lot of bandwidth and keep connections to our servers open longer than they need to be. We can free up connections and reduce our bandwidth usage by using a Content Delivery Network (CDN). These networks host your static files (images, stylesheets, JavaScript files, etc.) on a network of servers that can span the globe. This allows your content to be hosted closer to your users, so they can download it much more quickly.

Read Part V, Content Delivery Networks